Compressing Unit Vectors into 16-bits

Some years ago I posted an article about how to compress unit vectors, or normals, efficiently into 16 bits. The original article is getting harder to find, so I’m reposting a version of it here.

Since the original article this method has been used in a number of game engines, the largest being Valve’s source engine. There are methods that have a higher accuracy, but I believe this method is still best in terms of very good accuracy for a very low computational cost.

Vectors and Normals

In graphics, or physics modeling we often have the need to represent things using 3 dimensional vectors. 3D vectors are normally represented as three floating point quantities. With single precision floats your vectors will typically be 12 bytes. These full vectors can represent either a point in 3D space, or a direction and a magnitude. For these applications you will need the full vector.

But for many applications you only need to encode a direction. For this you typically use vectors of length 1, or unit vectors. When they are used to encode the direction that some surface is facing they are called normals. In graphics, matrices used to represent orientations, or affine matrices used for transformations, are composed of 3 or 4 unit vectors.

Below I describe a method to compress these vectors down to just 2 bytes, with pretty good accuracy. This is a great method to use for any application where you need a large number of surface normals and where memory and computation is at a premium. This would also be useful to store the surface normals of textures for bump mapping algorithms, especially when writing shaders. It might also be useful anywhere you have large numbers of transforms, such as an animation system.

Performance

First lets look at what my compressed normals look like when used for lighting.

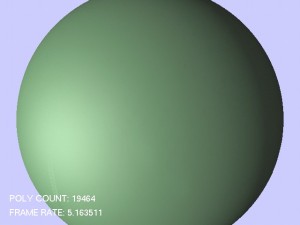

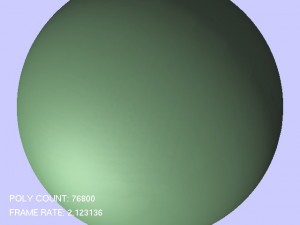

In these two images I have a sphere represented with about 40000 triangles. In the first picture I am using complete 12 byte normals. In the second picture the normals used to light the sphere are quantized 2-byte normals. ( click on the image to enlarge it )

Notice how the first picture is perfect sphericity itself. But the second picture is not too bad. The surface has a very slight wobble to it. Now remember that these pictures represent the worst case for using normals to calculate lighting. The sphere is lit obliquely and the surface is a nice smooth continuous curve. In the typical case of a 3D model, or a terrain, this quantization error is absolutely invisible.

The Method

We can break the method down into two parts:

-

1. Reducing the number of values we have to store

2. Reducing the resolution of the values that we store, or “quantizing” them.

Bit Packing

If you know that your vector is unit length then you know that:

X2 + Y2 + Z2 = 1

Therefore you know that if you have 2 numbers you can compute the magnitude of the third number, but not its sign. So you only need to store:

-

1. The sign of each element

2. Just two of the three components of the vector ( say X and Y )

Given that I am going to pack this whole thing into 16 bits, I use 3 bits for the sign of each vector component, leaving me 13 bits to represent the magnitude of two components.

You also know that the magnitude of any component ranges from 0 to 1 so it makes quantization practical.

It would be simple to just code one value into 6 bits and another value into the remaining 7 bits and just use those two numbers to look up the third value in a table. However it is possible to express both numbers using seven bits each but using a total of only 13 bits for both. This is because when you select two components of a unit vector, not every pair of numbers less than 1 is possible. For example if X == 1.0 then Y and Z must both be 0. As X becomes smaller more values become possible for Y and Z. If you make your quantization table a 2d table indexed on the square of X and the square of Y, then the cells of the table that can be filled with valid values for Z form a triangle. You can see that we are using only half of the space. Which is great because I am short one bit!

So say that I will represent X with 7 bits, and Y with 6 bits and look up Z in my table. To get a 7th bit of resolution for Y I just check to see if my quantized Y would be bigger than the dynamic range of 6 bits. In that case I subtract both numbers from 127. Now the X still fits in the X slot and the Y is guaranteed to fit in the Y slot and the pair of numbers will index into a part of the table that is still not coded with values for Z.

How you map the values of X and Y into quantized values will determine a great deal about the quality of the normals. A naive method would be just to multiply each value by 127 and round to the nearest whole number and in fact this is just what I did for the first implementation of this algorithm. But there is a better way to quantize these values.

Improved Quantization

If you just multiply the values of X and Y by 127, then you are projecting a sphere onto the XY plane. Therefore the quantization method is pretty good in places where the sphere surface is not perpendicular to the XY plane, but gets progressively worse as the surface becomes perpendicular to the projection plane. You can see in the diagram that normals in the red area map to a much smaller part of the plane than normals in the green area. More specifically adding 2 bits to the sample size generally increases the accuracy by a factor 4 but near the edges the accuracy increases only twice.

One way to avoid this effect is to use another type of projection. By selecting a radial projection on the plane that goes through points X0=(1,0,0),Y0={0,1,0) and Z0=(0,0,1). That is, one casts a ray from the origin to the given point on the sphere and finds the intersection between the ray and the plane. To simplify things even further the new method uses a non-orthogonal coordinate system on the plane such that X0->(0,0), Y0->(1,0), Z0->(0,1)

Since we only consider the octant where x,y,z > 0, the sphere is nowhere perpendicular to the plane. In fact the maximum angle between the sphere and the plane is around 30 degrees. In this case the projection is everywhere nice and regular and each bit of the sample always corresponds to a 2-fold increase in accuracy. Calculations show that the spread between the maximum accuracy and the minimal accuracy is only about 5 times.

The formula for the projective transform is:

zbits = 1/3 * (1 – (x+y-2*z)/(x+y+z))

ybits = 1/3 * (1 – (x-2*y+z)/(x+y+z))

Which we can reduce to:

zbits = z / ( x + y + z )

ybits = y / ( x + y + z )

The ybits and zbits are now between 0 and 1, and so is ( ybits + zbits ). Thus we can apply a similar bit-packing method as in the original algorithm. In the original method since the diagonal of the table was all zero, we could quantize two values, x and y, from 0-127, since the diagonal could be shared in the two encodings. Since in this mapping the diagonal varies, we have to quantize two values ybits and zbits from 0-126 so that we code nothing on the diagonal.

To unpack we use the reverse transform and then normalize the vector so it again lies on the sphere. Since normalization is just a scaling by some number, we can pre-tabulate the amount of required scaling for each u and v and thus avoid costly divisions and square roots. Logically what we do is:

scaling = scalingTable[zbits][ybits] v.z = zbits * scaling v.y = ybits * scaling v.x = (1 - zbits - ybits ) * scaling

There is another way to understand this projection. We know the length of unit vectors is always 1, so we don’t have to store that information. We can store any vector that is co-linear with the normal and the quantized vector and then just normalize the vector once we unpack it ( by multiplying it with a stored normalization constant ). So all we need to do is store the ratio of say x/(x+y+z) and y/(x+y+z) as our two quantized values. Any ratios that allow us to reconstruct the proportions of x,y,z will do. Since we are quantizing the values in the range 0-126 just do the following:

w = 126 / ( x + y + z ) xbits = x * w; ybits = y * w;

Now xbits is the ratio of x/(x+y+z) quantized in the range of 0-126, and ybits is the ratio y/(x+y+z). Given these two ratios we can unpack a vector colinear with the original vector like so:

x = xbits y = ybits z = 126 - x - y

Finally to get the original normal back, we just multiply the unpacked vector by the aproporiate scaling constant:

x *= scaling; y *= scaling; z *= scaling;

A quick review should convince you that both methods are in fact identical.

Implementation

#ifndef _3D_UNITVEC_H

#define _3D_UNITVEC_H

#include

#include

#include "3dmath/vector.h"

#define UNITVEC_DECLARE_STATICS \

float cUnitVector::mUVAdjustment[0x2000]; \

c3dVector cUnitVector::mTmpVec;

// upper 3 bits

#define SIGN_MASK 0xe000

#define XSIGN_MASK 0x8000

#define YSIGN_MASK 0x4000

#define ZSIGN_MASK 0x2000

// middle 6 bits - xbits

#define TOP_MASK 0x1f80

// lower 7 bits - ybits

#define BOTTOM_MASK 0x007f

// unitcomp.cpp : A Unit Vector to 16-bit word conversion algorithm

// based on work of Rafael Baptista (rafael@oroboro.com)

// Accuracy improved by O.D. (punkfloyd@rocketmail.com)

//

// a compressed unit vector. reasonable fidelty for unit vectors in a

// 16 bit package. Good enough for surface normals we hope.

class cUnitVector : public c3dMathObject

{

public:

cUnitVector() { mVec = 0; }

cUnitVector( const c3dVector& vec )

{

packVector( vec );

}

cUnitVector( unsigned short val ) { mVec = val; }

cUnitVector& operator=( const c3dVector& vec )

{ packVector( vec ); return *this; }

operator c3dVector()

{

unpackVector( mTmpVec );

return mTmpVec;

}

void packVector( const c3dVector& vec )

{

// convert from c3dVector to cUnitVector

assert( vec.isValid());

c3dVector tmp = vec;

// input vector does not have to be unit length

// assert( tmp.length() <= 1.001f );

mVec = 0;

if ( tmp.x < 0 ) { mVec |= XSIGN_MASK; tmp.x = -tmp.x; }

if ( tmp.y < 0 ) { mVec |= YSIGN_MASK; tmp.y = -tmp.y; }

if ( tmp.z < 0 ) { mVec |= ZSIGN_MASK; tmp.z = -tmp.z; }

// project the normal onto the plane that goes through

// X0=(1,0,0),Y0=(0,1,0),Z0=(0,0,1).

// on that plane we choose an (projective!) coordinate system

// such that X0->(0,0), Y0->(126,0), Z0->(0,126),(0,0,0)->Infinity

// a little slower... old pack was 4 multiplies and 2 adds.

// This is 2 multiplies, 2 adds, and a divide....

float w = 126.0f / ( tmp.x + tmp.y + tmp.z );

long xbits = (long)( tmp.x * w );

long ybits = (long)( tmp.y * w );

assert( xbits < 127 );

assert( xbits >= 0 );

assert( ybits < 127 );

assert( ybits >= 0 );

// Now we can be sure that 0<=xp<=126, 0<=yp<=126, 0<=xp+yp<=126

// however for the sampling we want to transform this triangle

// into a rectangle.

if ( xbits >= 64 )

{

xbits = 127 - xbits;

ybits = 127 - ybits;

}

// now we that have xp in the range (0,127) and yp in the

// range (0,63), we can pack all the bits together

mVec |= ( xbits << 7 );

mVec |= ybits;

}

void unpackVector( c3dVector& vec )

{

// if we do a straightforward backward transform

// we will get points on the plane X0,Y0,Z0

// however we need points on a sphere that goes through

// these points.

// therefore we need to adjust x,y,z so that x^2+y^2+z^2=1

// by normalizing the vector. We have already precalculated

// the amount by which we need to scale, so all we do is a

// table lookup and a multiplication

// get the x and y bits

long xbits = (( mVec & TOP_MASK ) >> 7 );

long ybits = ( mVec & BOTTOM_MASK );

// map the numbers back to the triangle (0,0)-(0,126)-(126,0)

if (( xbits + ybits ) >= 127 )

{

xbits = 127 - xbits;

ybits = 127 - ybits;

}

// do the inverse transform and normalization

// costs 3 extra multiplies and 2 subtracts. No big deal.

float uvadj = mUVAdjustment[mVec & ~SIGN_MASK];

vec.x = uvadj * (float) xbits;

vec.y = uvadj * (float) ybits;

vec.z = uvadj * (float)( 126 - xbits - ybits );

// set all the sign bits

if ( mVec & XSIGN_MASK ) vec.x = -vec.x;

if ( mVec & YSIGN_MASK ) vec.y = -vec.y;

if ( mVec & ZSIGN_MASK ) vec.z = -vec.z;

assert( vec.isValid());

}

static void initializeStatics()

{

for ( int idx = 0; idx < 0x2000; idx++ )

{

long xbits = idx >> 7;

long ybits = idx & BOTTOM_MASK;

// map the numbers back to the triangle (0,0)-(0,127)-(127,0)

if (( xbits + ybits ) >= 127 )

{

xbits = 127 - xbits;

ybits = 127 - ybits;

}

// convert to 3D vectors

float x = (float)xbits;

float y = (float)ybits;

float z = (float)( 126 - xbits - ybits );

// calculate the amount of normalization required

mUVAdjustment[idx] = 1.0f / sqrtf( y*y + z*z + x*x );

assert( _finite( mUVAdjustment[idx]));

}

}

void test()

{

#define TEST_RANGE 4

#define TEST_RANDOM 100

#define TEST_ANGERROR 1.0

float maxError = 0;

float avgError = 0;

int numVecs = 0;

{for ( int x = -TEST_RANGE; x < TEST_RANGE; x++ )

{

for ( int y = -TEST_RANGE; y < TEST_RANGE; y++ )

{

for ( int z = -TEST_RANGE; z < TEST_RANGE; z++ )

{

if (( x + y + z ) == 0 ) continue;

c3dVector vec( (float)x, (float)y, (float)z );

c3dVector vec2;

vec.normalize();

packVector( vec );

unpackVector( vec2 );

float ang = vec.dot( vec2 );

ang = (( fabs( ang ) > 0.99999f ) ? 0 : (float)acos(ang));

if (( ang > TEST_ANGERROR ) | ( !_finite( ang )))

{

cerr << "error: " << ang << endl;

cerr << "orig vec: " << vec.x << ",\t" << vec.y << ",\t"

<< vec.z << "\tmVec: " << mVec << endl;

cerr << "quantized vec2: " << vec2.x << ",\t" << vec2.y << ",\t"

<< vec2.z << endl << endl;

}

avgError += ang;

numVecs++;

if ( maxError < ang ) maxError = ang;

}

}

}}

for ( int w = 0; w < TEST_RANDOM; w++ )

{

c3dVector vec( genRandom(), genRandom(), genRandom());

c3dVector vec2;

vec.normalize();

packVector( vec );

unpackVector( vec2 );

float ang =vec.dot( vec2 );

ang = (( ang > 0.999f ) ? 0 : (float)acos(ang));

if (( ang > TEST_ANGERROR ) | ( !_finite( ang )))

{

cerr << "error: " << ang << endl;

cerr << "orig vec: " << vec.x << ",\t" << vec.y << ",\t"

<< vec.z << "\tmVec: " << mVec << endl;

cerr << "quantized vec2: " << vec2.x << ",\t" << vec2.y << ",\t"

<< vec2.z << endl << endl;

}

avgError += ang;

numVecs++;

if ( maxError < ang ) maxError = ang;

}

{ for ( int x = 0; x < 50; x++ )

{

c3dVector vec( (float)x, 25.0f, 0.0f );

c3dVector vec2;

vec.normalize();

packVector( vec );

unpackVector( vec2 );

float ang = vec.dot( vec2 );

ang = (( fabs( ang ) > 0.999f ) ? 0 : (float)acos(ang));

if (( ang > TEST_ANGERROR ) | ( !_finite( ang )))

{

cerr << "error: " << ang << endl;

cerr << "orig vec: " << vec.x << ",\t" << vec.y << ",\t"

<< vec.z << "\tmVec: " << mVec << endl;

cerr << " quantized vec2: " << vec2.x << ",\t" << vec2.y << ",\t"

<< vec2.z << endl << endl;

}

avgError += ang;

numVecs++;

if ( maxError < ang ) maxError = ang;

}}

cerr << "max angle error: " << maxError

<< ", average error: " << avgError / numVecs

<< ", num tested vecs: " << numVecs << endl;

}

protected:

unsigned short mVec;

static float mUVAdjustment[0x2000];

static c3dVector mTmpVec;

};

#endif // _3D_VECTOR_H

Resources:

| Spherical Compression of Normal Vectors | Another method – higher accuracy – but more computationally intensive. |

| Fast Normal Vector Compression with Bounded Error | Another method, similar principle, higher accuracy, lower compression. |

| Method and apparatus for compressed 3d unit vector storage and retrieval | Another, patented method. |

| Method and apparatus for compressed data storage and retrieval | Yet another patented method. |

Clever. Talking about clever vector code, didn’t you use to have an article about extremely high-speed rough approximations to distance=sqrt(x^2+y^2)? http://en.wikibooks.org/wiki/Algorithms/Distance_approximations and http://en.wikipedia.org/wiki/Talk:Euclidean_distance#fast_2d_calculation_values both mention ” http://web.oroboro.com:90/rafael/docserv.php/index/programming/article/distance ” which gives me a “could not connect” error.

I did. I could re-post it, as the technique is a good one. But today it has limited application as even mobile processors have square root instructions now.

Ey, 789et. Not bad, not bad at all. It’s got that something-something that keeps you coming back. Give it a try and see what you think! Dive in here: 789et